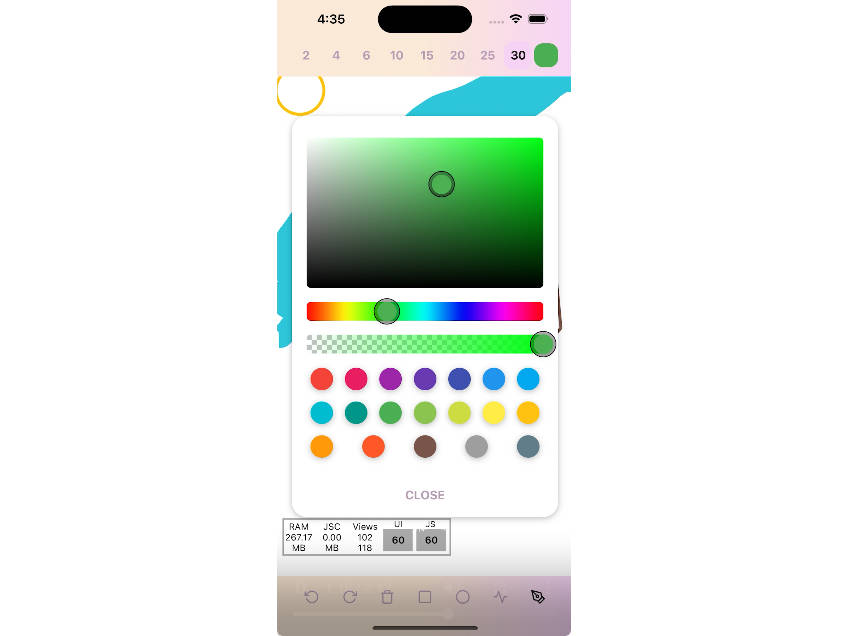

An app to detect colorwaves (swatches/palettes) in the real world – powered by VisionCamera and Reanimated.

I wrote this app in less than a day, a speed that is hard to match with native app development, where iOS and Android need separate codebases. Because it's written in React Native (TypeScript/JavaScript), it runs on both iOS and Android, while performance-critical parts (e.g. the image processing algorithm or the animations) are backed by native Objective-C/Swift/Java code.

See this Tweet for more information.


Try it!

Download the repository and run the following commands to try Colorwaver for yourself:

- iOS: `yarn try-ios`
- Android: `yarn try-android`

Project structure

This is a bare React Native project, created with create-react-native-app.

`src`: Contains the actual TypeScript + React Native front-end for the Colorwaver app.

`src/getColorPalette.ts`: Exposes the native iOS/Android frame processor plugin as a JS function with TypeScript types. The function is a worklet (marked with the `'worklet'` directive), so it has to be called from inside a frame processor.
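A minimal sketch of such a wrapper, assuming the native plugin is reachable as the global `__getColorPalette` (the name the project lists in `babel.config.js`). The `Frame` and `ColorPalette` types below are hypothetical stand-ins: the real `Frame` type comes from react-native-vision-camera, and the actual palette fields the plugin returns may differ between iOS and Android.

```typescript
// Hypothetical stand-in for VisionCamera's Frame type.
export interface Frame {
  width: number;
  height: number;
}

// Hypothetical palette shape: one color string per slot.
export interface ColorPalette {
  primary: string;
  secondary: string;
  background: string;
  detail: string;
}

// Assumption: the native plugin is injected as a global function.
declare global {
  // eslint-disable-next-line no-var
  var __getColorPalette: ((frame: Frame) => ColorPalette) | undefined;
}

// Typed JS wrapper around the native plugin. The 'worklet' directive marks
// the function for Reanimated's Babel plugin so it can run outside the React JS thread.
export function getColorPalette(frame: Frame): ColorPalette {
  'worklet';
  const plugin = globalThis.__getColorPalette;
  if (plugin === undefined) {
    throw new Error('Frame processor plugin "getColorPalette" is not registered');
  }
  return plugin(frame);
}
```

Inside `App.tsx`, a call like this would sit in the body of the function passed to VisionCamera's `useFrameProcessor`. Throwing on a missing plugin makes a misconfigured build fail loudly instead of silently returning nothing.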

`src/Router.tsx`: The main component that gets registered by `index.js`. It acts as the main navigator, navigating either to the Splash page (permissions) or the App page (main app), depending on whether the user has granted camera permission.
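The routing decision itself is small. A sketch, assuming the permission status strings of VisionCamera 2.x (`'authorized'`, `'not-determined'`, `'denied'`, `'restricted'`; newer versions name them differently) and hypothetical route names `'Splash'` and `'App'`:

```typescript
// Permission states, modeled on VisionCamera 2.x's CameraPermissionStatus
// (hypothetical local type; newer VisionCamera versions use other names).
export type CameraPermissionStatus =
  | 'authorized'
  | 'not-determined'
  | 'denied'
  | 'restricted';

// The two pages the router can show (hypothetical route names).
export type Route = 'Splash' | 'App';

// Only an explicit grant leads to the main app; every other state
// sends the user to the Splash page, which asks for permission.
export function getInitialRoute(status: CameraPermissionStatus): Route {
  return status === 'authorized' ? 'App' : 'Splash';
}
```

In `Router.tsx`, the status would come from something like VisionCamera's `Camera.getCameraPermissionStatus()`, and the returned route would select which page to render.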

`src/Splash.tsx`: The first (splash) page, which asks the user for camera permission.

`src/App.tsx`: Contains the actual source code for the app's front-end.

- VisionCamera is used to get a camera device and display a `Camera` component. A frame processor (a function that gets called for every frame the camera "sees") is attached to it, and calls the native iOS/Android frame processor plugin.
- Reanimated is used to smoothly animate between color changes.

Because VisionCamera also uses the Worklet API, the entire process between receiving a camera frame and displaying the palette's colors does not use the React JS thread at all. Frame processing runs on a separate thread managed by VisionCamera, which then dispatches the animations to Reanimated's UI thread. This is why the app runs as smoothly as a native iOS or Android app.
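The data flow described above can be modeled without any native code. In this sketch, plain `{ value }` objects stand in for Reanimated shared values and `getPalette` stands in for the native plugin (both hypothetical substitutes for `useSharedValue` and `getColorPalette`); `createPaletteFrameProcessor` is likewise a hypothetical helper. The point is that the per-frame callback writes its result straight into shared values, so no React state update, and therefore no React JS thread work, is involved.

```typescript
// Stand-in for Reanimated's SharedValue: a mutable box that animated styles
// can read on the UI thread without a React re-render (hypothetical type).
export interface SharedValue<T> {
  value: T;
}

// Hypothetical palette shape returned by the native plugin.
export interface ColorPalette {
  primary: string;
  secondary: string;
  background: string;
  detail: string;
}

export type PaletteColors = { [K in keyof ColorPalette]: SharedValue<string> };

// Builds the per-frame callback (hypothetical helper). In the app, its body
// would be the worklet that VisionCamera runs for every camera frame.
export function createPaletteFrameProcessor<F>(
  getPalette: (frame: F) => ColorPalette,
  colors: PaletteColors,
): (frame: F) => void {
  return (frame: F) => {
    'worklet';
    const palette = getPalette(frame);
    // Writing to shared values does not go through React state, so the
    // React JS thread is not involved in updating the displayed colors.
    colors.primary.value = palette.primary;
    colors.secondary.value = palette.secondary;
    colors.background.value = palette.background;
    colors.detail.value = palette.detail;
  };
}
```

In the real app, the callback body would be the worklet passed to `useFrameProcessor`, and animated styles reading those shared values would update on the UI thread.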

`src/useAnimatedColor.ts`: A helper function to animate color changes with `SharedValue`s.
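How `useAnimatedColor.ts` interpolates isn't spelled out here. A common approach is to animate a progress value from 0 to 1 (for example with Reanimated's `withTiming`) and blend the previous and next color on every frame. The sketch below shows only that blending step as a plain function; `mixHexColors` is a hypothetical helper that assumes `#RRGGBB` input and mixes linearly per RGB channel. Reanimated also ships `interpolateColor`, which a real hook would more likely use.

```typescript
// Parses "#RRGGBB" into [r, g, b] channel values in 0..255.
function parseHex(hex: string): [number, number, number] {
  const match = /^#([0-9a-f]{6})$/i.exec(hex);
  if (match === null) throw new Error(`Unsupported color: ${hex}`);
  const n = parseInt(match[1], 16);
  return [(n >> 16) & 0xff, (n >> 8) & 0xff, n & 0xff];
}

// Linearly blends two "#RRGGBB" colors: progress 0 gives `from`, 1 gives `to`.
// Progress outside 0..1 is clamped.
export function mixHexColors(from: string, to: string, progress: number): string {
  const t = Math.min(1, Math.max(0, progress));
  const a = parseHex(from);
  const b = parseHex(to);
  const mixed = a.map((channel, i) => Math.round(channel + (b[i] - channel) * t));
  return '#' + mixed.map((c) => c.toString(16).padStart(2, '0')).join('');
}
```

For example, `mixHexColors('#000000', '#ffffff', 0.5)` yields `'#808080'`.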

`ios`: Contains the basic skeleton for a React Native iOS app, plus the native `getColorPalette(...)` frame processor plugin.

`ios/PaletteFrameProcessorPlugin.m`: Declares the Swift frame processor plugin `getColorPalette(...)`.

`ios/PaletteFrameProcessorPlugin.swift`: Contains the actual Swift code for the native iOS frame processor plugin `getColorPalette(...)`. It uses the CoreImage API to convert the `CMSampleBuffer` to a `UIImage`, and then uses the `UIImageColors` library to build the color palette. VisionCamera's frame processor API is used to expose this function as a frame processor plugin.
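UIImageColors has its own, more elaborate algorithm for picking background, primary, secondary and detail colors. The general idea of extracting a palette from pixels can be illustrated with a much simpler, swapped-in technique: quantize each pixel into a coarse RGB bucket, count the buckets, and report the average color of the most populated ones. This is a sketch, not what UIImageColors does, and it assumes the frame has already been decoded into an array of RGB pixels (in practice you would typically sample a downscaled image for speed). `dominantColors` is a hypothetical name.

```typescript
// An RGB pixel with channels in 0..255.
export type RGB = [number, number, number];

// Returns up to `count` dominant colors as "#rrggbb" strings by quantizing each
// channel into `levels` buckets and counting how many pixels land in each bucket.
export function dominantColors(pixels: RGB[], count: number, levels = 8): string[] {
  const step = 256 / levels;
  const buckets = new Map<number, { n: number; r: number; g: number; b: number }>();
  for (const [r, g, b] of pixels) {
    const key =
      Math.floor(r / step) * levels * levels +
      Math.floor(g / step) * levels +
      Math.floor(b / step);
    const bucket = buckets.get(key) ?? { n: 0, r: 0, g: 0, b: 0 };
    // Accumulate channel sums so each bucket can report its average color.
    bucket.n += 1;
    bucket.r += r;
    bucket.g += g;
    bucket.b += b;
    buckets.set(key, bucket);
  }
  const toHex = (v: number) => Math.round(v).toString(16).padStart(2, '0');
  return Array.from(buckets.values())
    .sort((x, y) => y.n - x.n) // most populated buckets first
    .slice(0, count)
    .map(({ n, r, g, b }) => '#' + toHex(r / n) + toHex(g / n) + toHex(b / n));
}
```

Averaging within a bucket, rather than returning the bucket's corner color, keeps the reported colors close to what the camera actually saw.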

`ios/Colorwaver-Bridging-Header.h`: A bridging header that imports Objective-C headers into Swift.

`ios/Podfile`: Adds the `UIImageColors` library.

`ios/UIColor+hexString.swift`: An extension for `UIColor` that converts `UIColor` instances to hex color strings. This is required because React Native handles colors as strings.
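A TypeScript mirror of what such an extension has to do, as a sketch: `UIColor` reports RGB components as floating-point values (for example via `getRed(_:green:blue:alpha:)`), usually between 0 and 1, which must be scaled to 0 to 255 and formatted as hex. Extended-range colors can fall outside 0 to 1, hence the clamp. The function name `componentsToHex` and the uppercase `#RRGGBB` format without alpha are assumptions; the repository's extension may format differently.

```typescript
// Converts RGB components in 0..1 (the range UIColor usually reports)
// into an uppercase "#RRGGBB" string that React Native accepts as a color.
export function componentsToHex(red: number, green: number, blue: number): string {
  const channel = (c: number): string => {
    // Clamp first: extended-range colors may report values outside 0..1.
    const clamped = Math.min(1, Math.max(0, c));
    return Math.round(clamped * 255).toString(16).padStart(2, '0').toUpperCase();
  };
  return `#${channel(red)}${channel(green)}${channel(blue)}`;
}
```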

`android`: Contains the basic skeleton for a React Native Android app, plus the native `getColorPalette(...)` frame processor plugin.

`android/app/build.gradle`: The Gradle build file for the Android project. It installs the following third-party dependencies:

- `androidx.camera:camera-core`
- `androidx.palette:palette`

`android/app/src/main/java/com/colorwaver/utils`: Contains two files copied from android/camera-samples, including `YuvToRgbConverter.kt`, that convert `ImageProxy` instances to `Bitmap`s.
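The copied converter performs this natively and much faster, but the per-pixel math behind a YUV to RGB conversion fits in a few lines. This sketch assumes full-range BT.601 (JFIF) coefficients and the 2x2 chroma subsampling of `YUV_420_888`; the `Plane` type is a hypothetical stand-in for the row and pixel strides that `ImageProxy.PlaneProxy` exposes, and the exact matrix the Android converter applies may differ.

```typescript
// Converts one full-range YUV (YCbCr) sample to RGB using BT.601 (JFIF)
// coefficients. Inputs and outputs are in 0..255.
export function yuvToRgb(y: number, u: number, v: number): [number, number, number] {
  const d = u - 128; // Cb offset from neutral
  const e = v - 128; // Cr offset from neutral
  const clamp = (x: number) => Math.min(255, Math.max(0, Math.round(x)));
  return [clamp(y + 1.402 * e), clamp(y - 0.344136 * d - 0.714136 * e), clamp(y + 1.772 * d)];
}

// One plane of a YUV_420_888 image (hypothetical local type).
export interface Plane {
  data: Uint8Array;
  rowStride: number;
  pixelStride: number;
}

// Converts a whole YUV_420_888 frame into packed RGB bytes (3 per pixel).
export function yuv420ToRgb(width: number, height: number, y: Plane, u: Plane, v: Plane): Uint8Array {
  const out = new Uint8Array(width * height * 3);
  for (let row = 0; row < height; row++) {
    for (let col = 0; col < width; col++) {
      const yValue = y.data[row * y.rowStride + col * y.pixelStride];
      // Chroma is subsampled 2x2: four luma samples share one U/V pair.
      const uvRow = row >> 1;
      const uvCol = col >> 1;
      const uValue = u.data[uvRow * u.rowStride + uvCol * u.pixelStride];
      const vValue = v.data[uvRow * v.rowStride + uvCol * v.pixelStride];
      const [r, g, b] = yuvToRgb(yValue, uValue, vValue);
      const i = (row * width + col) * 3;
      out[i] = r;
      out[i + 1] = g;
      out[i + 2] = b;
    }
  }
  return out;
}
```

Honoring `rowStride` and `pixelStride` matters because camera buffers often pad rows and may interleave U and V samples.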

`android/app/src/main/java/com/colorwaver`: Contains the actual Java source code of the project.

`android/app/src/main/java/com/colorwaver/MainApplication.java`: Sets up react-native-reanimated.

`android/app/src/main/java/com/colorwaver/MainActivity.java`: Installs the `PaletteFrameProcessorPlugin` frame processor plugin inside the `onCreate` method.
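Installing a plugin at startup makes it callable from frame processors under a `__`-prefixed name (the native `getColorPalette` plugin is called `__getColorPalette` on the JS side). That naming effect can be modeled with a hypothetical registry; this is a conceptual sketch only, not how VisionCamera installs native plugins internally, and `registerFrameProcessorPlugin` is not a real VisionCamera API.

```typescript
// Signature of a frame processor plugin: takes a frame (plus optional
// arguments) and returns a result (hypothetical local type).
export type PluginFn = (frame: unknown, ...args: unknown[]) => unknown;

// Models the effect of installing a plugin: a plugin named "getColorPalette"
// becomes callable as the global "__getColorPalette".
export function registerFrameProcessorPlugin(name: string, fn: PluginFn): string {
  const globalName = `__${name}`;
  const target = globalThis as unknown as Record<string, unknown>;
  // Refuse to silently overwrite an existing plugin with the same name.
  if (globalName in target) {
    throw new Error(`Plugin "${name}" is already registered`);
  }
  target[globalName] = fn;
  return globalName;
}
```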

`android/app/src/main/java/com/colorwaver/PaletteFrameProcessorPlugin.java`: Contains the actual Java code for the native Android frame processor plugin `getColorPalette(...)`. It uses the `YuvToRgbConverter` to convert the `ImageProxy` to a `Bitmap`, and then passes that to the Palette API from AndroidX to build the color palette. VisionCamera's frame processor API is used to expose this function as a frame processor plugin.
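AndroidX Palette reports colors as packed `int`s in `0xAARRGGBB` form (for example from `Palette.getVibrantColor(int defaultColor)`, which returns the default when no vibrant swatch was found), while React Native handles colors as strings. A sketch of that conversion, mirrored in TypeScript: the function names are hypothetical and the lowercase `#rrggbb` output is an assumption about what the plugin returns. Because Java `int`s are signed, opaque colors arrive as negative numbers, which the bitmask handles.

```typescript
// Converts a packed Android color int (0xAARRGGBB, possibly negative because
// Java ints are signed) into a "#rrggbb" string, dropping the alpha byte.
export function argbIntToHex(argb: number): string {
  const rgb = argb & 0xffffff; // keep only the low 24 bits: R, G, B
  return '#' + rgb.toString(16).padStart(6, '0');
}

// Mirrors Palette's getXxxColor(defaultColor) convention: use the swatch
// color if one was found, otherwise fall back to the default.
export function swatchOrDefault(swatch: number | null, defaultColor: number): string {
  return argbIntToHex(swatch ?? defaultColor);
}
```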

`babel.config.js`: Adds the native frame processor plugin `getColorPalette` (called `__getColorPalette`) to the `globals` list of Reanimated's Babel plugin.

Credits

- @Simek for the original idea

- react-native-reanimated for allowing JS code to be dispatched to another Runtime (Worklets)

- react-native-vision-camera for allowing you to run JS code on a realtime feed of Camera Frames (Frame Processors)

- The Palette API from AndroidX for getting the Color Palette on Android

- UIImageColors for getting the Color Palette on iOS

- You guys, for downloading, giving 5 stars, and pushing Colorwaver up in the Charts.